I have been planning to start my personal blog for quite some time, but I never found the right moment to make it happen. Finally, I decided to kick things off by sharing my security research on the Ledger Nano S, which I conducted back in 2018. The technical details were already published at Black Hat USA in 2018. With this blog, my main objective is to consolidate all my research in one place. For those who haven’t seen my presentation or heard about the vulnerabilities I found in the Ledger device, I hope you find this post an informative read.

Background

With the rapid rise in popularity of cryptocurrencies during the 2010s, several companies emerged to develop secure devices for storing and using cryptocurrencies without storing or processing secret keys on the user’s computer. The Ledger Nano S, introduced in 2016, was one of the first such devices. It not only used a Secure Element to store cryptographic secrets but also supported multiple third-party applications for different cryptocurrencies, which were considered untrusted. A newly developed device with a potentially large number of bugs? Check. A large attack surface through the app API? Check. And, finally, high-value assets stored on the device that need to be protected? Check! This made me very curious and excited to see what kind of bugs I could find. My research ignored the cryptocurrency aspect entirely and focused only on device security.

Ledger Nano S architecture

The Ledger Nano S is a USB-connected device protected by a user PIN. During the initial setup, the device prompts the user to set a new PIN, and subsequently generates a new secret seed within the device. The user is then able to save a backup of the seed, while the private keys derived from the seed remain securely stored within the device.

To use the device, the user connects it to a PC via USB and unlocks it by entering the PIN. Further interactions can then take place through a browser extension.

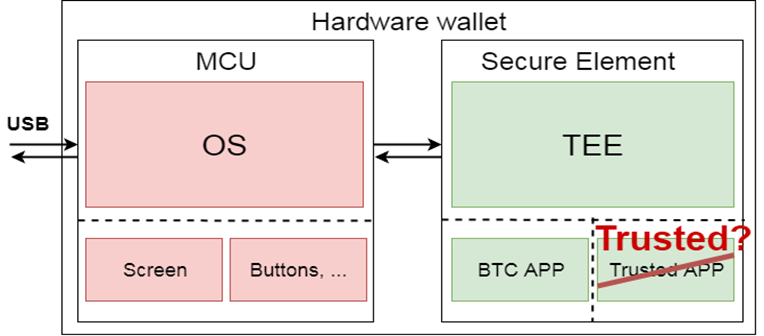

Internally, the Ledger device consists of two main components. The first is an untrusted MCU, an STM chip, which handles USB communication, the display, and the buttons. The second is a Secure Element that runs the BOLOS operating system and hosts the various cryptocurrency applications.

The applications running on the device can be obtained from a store and may be developed by third parties. Both the MCU firmware and the SDK for Secure Element applications are open source, promoting transparency and community collaboration. The BOLOS operating system, however, remains proprietary, as it uses a proprietary HAL provided by the Secure Element to ensure the overall security of the system.

The Ledger security blog considers a variety of potential attackers, encompassing compromised PCs with malware, theft and physical attacks, as well as supply chain attacks. To counter these threats, the Ledger Nano S implements a range of security mechanisms and principles:

- Secrets remain within the Secure Element (SE) and are never exposed.

- The device is tamper-resistant, providing an additional layer of protection against physical access.

- Secure software updates are employed for both the MCU and SE, ensuring the integrity of the device’s firmware.

- Applications are isolated from each other, preventing unauthorized access to assets of other applets.

Attacking the device from an SE app

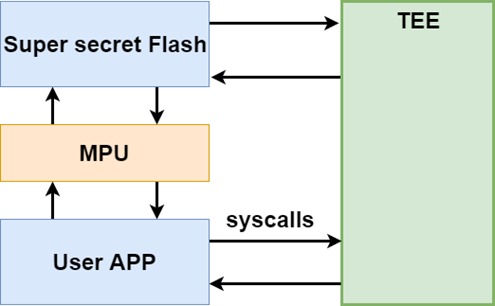

The Ledger applications have access to a portion of RAM and Flash memory, while the rest of the memory is protected by an MPU (Memory Protection Unit). BOLOS offers more than one hundred system calls to provide various functions, such as cryptographic functionality and access to peripherals. However, the abundance of system calls and the complexity of some of them make them an obvious attack surface that requires careful consideration.

Since the operating system on the Secure Element is proprietary, and only the API is accessible, I proceeded to build a series of test apps. Thankfully, the provided SDK offered a method to create and load applications onto the device, facilitating testing of the system calls.

My initial idea was to examine whether the pointers received from the application were adequately validated. A failure to validate any incoming pointer could potentially enable an application to gain arbitrary read/write access to the entire OS.

Dereferencing the null pointer

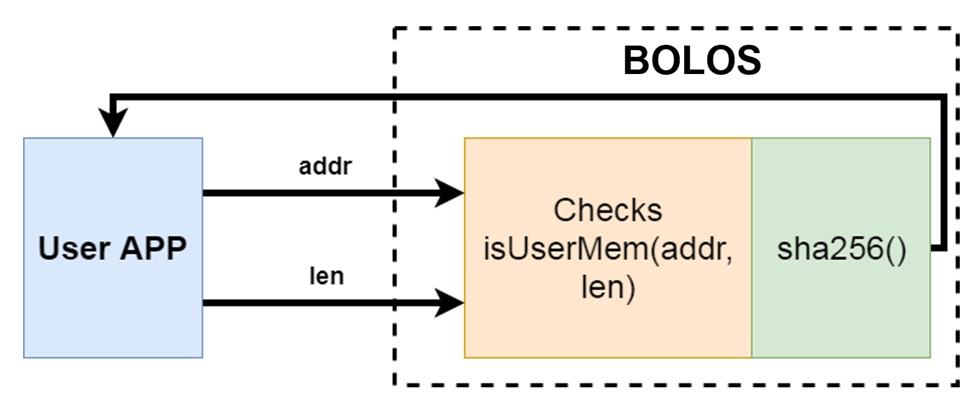

The majority of API calls that receive pointers to user data process them and return an exception if the pointer is not within a valid user memory region. Specifically, the exception SW6404 is returned to indicate a memory access violation.

To verify that the memory range checks were implemented correctly, I developed a simple test application. It used an API call for SHA256 hash computation, chosen more or less arbitrarily; the only requirement was that the call received a pointer and read data from that address.

In the test application, a loop incremented an address and invoked the SHA256 API call with each new address value. The expected outcome was that the pointer check would be performed and an exception thrown for every address outside the application’s flash or RAM regions.

To my surprise, the initial call with address 0x00000000 did not result in the expected SW6404 exception; instead, it returned a hash. However, for all other addresses that did not belong to the application, the expected exception was thrown.

Upon further investigation, I discovered that the null pointer was not being checked for some unknown reason, and the zero page was mapped in and contained valid data.

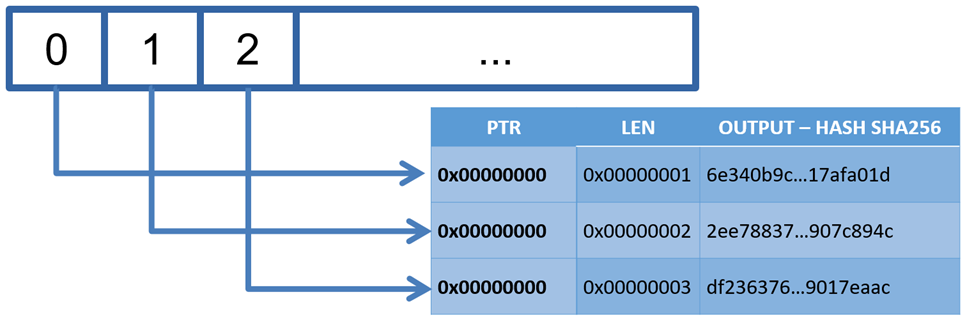

Given this finding, I decided to repeat the call with a null pointer and lengths of 1, 2, and 3 bytes. Here are the hashes I received for each case:

From the responses, it became apparent that, for some reason, the null pointer was not checked in the same manner as other addresses. Instead, it was directly used by the system call. The process of exploiting this vulnerability for memory disclosure is quite straightforward:

1. Begin with a null pointer and zero length.

2. Increment the length by one byte.

3. Obtain the SHA256 hash of the data.

4. Brute force the new byte: hash the already-known bytes followed by each of the 256 possible values until the result matches.

5. Keep track of the correctly guessed bytes.

6. Repeat steps 2 to 5.

Since only one byte needs to be guessed at a time, this process is entirely feasible and can be carried out efficiently over any readable range. Unfortunately, the system call stops returning data once the length exceeds 8 KB. This limitation is likely due to the syscall’s own constraints rather than any security measure. Nevertheless, this bug alone was sufficient to retrieve a substantial portion of memory that should not have been accessible to any application.

The first bytes leaked from the zero address are:

00 28 00 20 81 20 04 00 85 20 04 00 6F 2D 00 00.

Since the Secure Element is an ARM32 architecture, the vector table is readily identifiable: read as a little-endian word, the initial stack pointer is 0x20002800, as expected. However, because only 8 KB can be retrieved, the complete OS code is not available for reverse engineering at this point. Still, I was happy to have a first finding and continued with a similar approach for other system calls.

Partial memory disclosure in cx_hash syscall

Continuing with a similar approach, I looked for a syscall that, rather than treating the data at the application-provided pointer as a simple array of bytes, parses a more complex structure. One such syscall is cx_hash, which receives pointers and lengths for the input and output buffers, as well as a pointer to a hash context. This hash context starts with a header that, among other things, specifies the algorithm to be used, as shown below:

```c
struct cx_hash_header_s {
    cx_md_t algo;         /* hash algorithm identifier */
    unsigned int counter;
};
```

I created a similar test application that iterates over illegal memory addresses and passes each one as the hash context pointer to the cx_hash syscall. The expected outcome was that, for all illegal pointer values (excluding the null pointer, which was already known to be handled incorrectly), the syscall would throw SW6404, indicating that permission was denied.

However, in practice, the returned exceptions were not consistently the same. Some disallowed addresses triggered an exception indicating an illegal algorithm, while for others, the exception correctly indicated that memory access was not permitted.

Although I had no access to the source code of the syscall, the implementation appears to follow a structure similar to the following pseudocode:

```
if (!known_algo(context->algo))
    return ERR_INVALID_ALGO;   /* invalid hash algorithm */

if (!access_allowed(context, needed_len(context)))
    return ERR_SECURITY;       /* security error (SW6404) */
```

Instead of first checking the pointer to ensure that the destination is allowed, the algorithm type is retrieved first and verified for validity. Subsequently, the length of the context structure, dependent on the algorithm, is computed, and only then are the memory access permissions checked.

The reason for this bug is understandable, as the entire structure cannot be checked right away, and the algorithm must be determined first. However, the crucial check to ensure that the header itself is at least within user memory was missing.

This flaw gives attacker-controlled applications partial memory disclosure at any address, covering OS code and data, secret keys, flash contents, and more. While the attacker fully controls the address, the disclosed information is limited: it only reveals whether the byte at the specified address lies between 0 and 8 (a permission-denied exception) or between 9 and 255 (an illegal-algorithm exception). Nonetheless, a malicious application could exploit this vulnerability to extract some information from other applications or from the OS itself.

Debug app installation flag breaking flash isolation

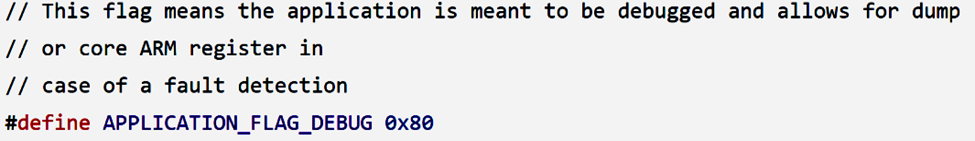

Continuing from the syscalls, my focus shifted to the installation flags and permission model employed by the OS during the application installation. One of the supported installation flags, as outlined in the SDK, is known as APPLICATION_FLAG_DEBUG.

The description of the flag doesn’t provide a clear understanding of the exact difference between regular and debug applications. Nevertheless, I decided to test it and observe any noticeable differences without prior knowledge of how debugging is intended to be used. Fortunately, the flag can be set for any third-party application and doesn’t necessitate an issuer signature or additional permissions.

Further investigation showed that when the flag is set in the Makefile, the MPU configuration differs from that of a regular application: the flag extends the MPU flash region, giving the application direct access to a larger memory area. The memory accessible via system calls, however, remains unaffected.

Without the debug flag, a user application has access to the flash region from 0x00131000 to 0x00134000, i.e. 12 KB. Once the flag is set during installation, the accessible region grows to 64 KB, spanning 0x00130000 to 0x00140000. As a result, the application can read memory belonging to other applications or even to the system.

By installing the debug application on a wallet with no other applications, I obtained a partial memory dump starting from 0x00120000. This data appears to belong to the dashboard application, as it contains ASCII strings from the BIP39 word list used to form the 24-word recovery phrase. The dump also revealed some test vectors for elliptic curves.

While this finding is fascinating for learning more about the system, it alone is not enough to compromise other wallets. To transform this finding into an exploitable vulnerability, it must be combined with another issue, which will be described in the following section.

Resetting the wallet does not clear the user flash

For usability, in case a user forgets the PIN or decides to permanently delete the device’s secrets, the device automatically wipes its secrets and allows reconfiguration after three incorrect PIN attempts. However, I observed that after three incorrect PIN attempts, both the preinstalled and the custom applications remained intact. The video below shows the menu presented to the user after three incorrect PIN attempts, once the device’s secrets have been wiped.

The Ledger security blog explicitly mentions that BOLOS wipes the secret seed and all relevant keys if the failed PIN attempt counter reaches the limit. However, there is no information provided about the state of user applications. While applications are not intended to store secret keys in flash memory, and most of them rely on dedicated syscalls to derive keys at runtime, there remains a possibility of sensitive information being present in the flash memory.

Combined with the debug flag vulnerability, which allows reading the flash memory of other applications, this opens a potential attack path: an attacker could retrieve any sensitive information stored in the flash memory of installed applications.

The attack looks as follows:

- An attacker steals a user’s device

- The attacker tries to guess the PIN

- If this fails (most likely), the device is wiped

- The attacker initializes the device as new

- The attacker installs a malicious application with the debug flag

- The debug application allows the attacker to read part of the flash of the previously installed applications

The only remaining question is whether there are any secrets that applications can store in flash. Fortunately, both the applications developed by Ledger and third-party developers are open source, making the answer just one grep away.

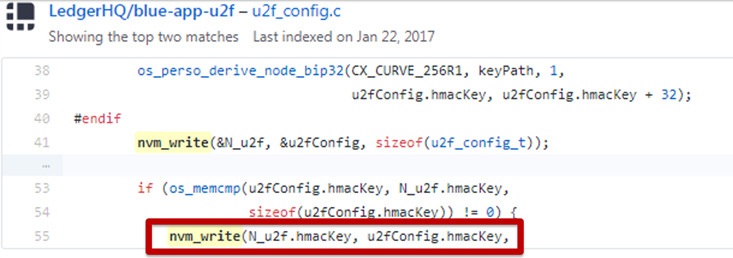

As it turns out, several applications store secret keys directly in flash instead of deriving them from the seed at runtime. Notably, the Monero, gpg, and FIDO applications follow this approach. To validate the attack and confirm that keys can indeed be retrieved from flash, I developed a PoC targeting the FIDO application.

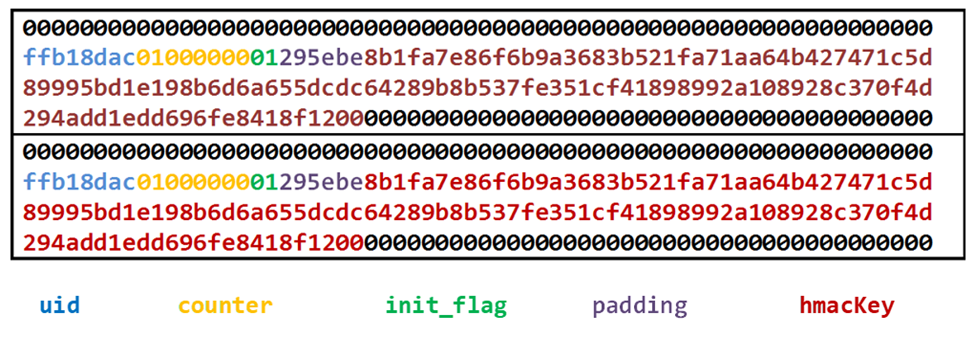

The dump below shows the FIDO application’s data in flash both before and after the wipe. The data highlighted in red is the HMAC key of the FIDO application, which survives the wipe and can therefore be read by the debug application.

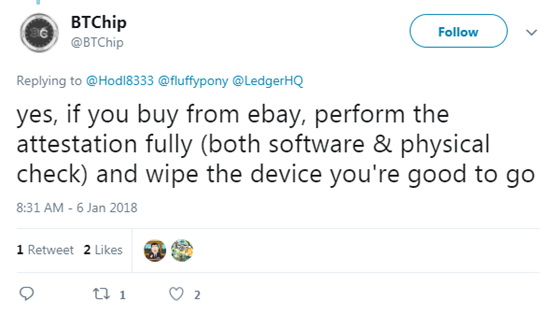

Supply chain attacks using Custom CA feature

So far, this post has covered malicious applications and physical access attacks. However, Ledger considers one more attack path: supply chain attacks. Twitter users have raised concerns about the safety of using a second-hand device purchased online, and one of the Ledger cofounders addressed this question in the tweet below:

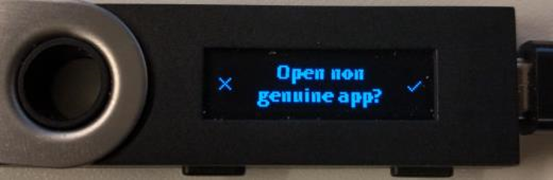

One feature relevant to supply chain attacks is the option to enroll a custom CA certificate on the device for development purposes. Custom CAs are needed because developers who load and test applications signed with a development key would otherwise have to enter the PIN and confirm each installation. In addition, a warning is displayed each time an untrusted development application is opened, requiring a physical button press to proceed.

The vulnerability lies in the fact that not only does the application flash remain intact after the wipe, but the enrolled custom CA certificates are not deleted either. Note also that original Ledger devices come with the Bitcoin and Ethereum applications preinstalled by default.

This vulnerability opens up the possibility of a supply chain attack as follows:

- Delete the default applications.

- Take the open-source code of the Bitcoin and Ethereum applications and add backdoors.

- Install a custom CA on the device.

- Reinstall the default applications, now with added backdoors.

- Enter the PIN incorrectly three times, wiping the device’s seed and making it appear brand new.

- Repackage and sell the device.

With this attack, when a user receives the device, there will be no signs of malicious applications. The attestation process will pass, and when the device is provisioned, it will not indicate that the apps are non-genuine since the custom CA cert is still present on the device.

Results

A total of seven vulnerabilities were reported to Ledger, and these issues were promptly addressed in the subsequent release. These vulnerabilities showed how all three different attack paths could potentially compromise the device’s security and its implemented security features. In response to these findings, Ledger published a post detailing the vulnerabilities and the corresponding fixes.
